James Fox, Cyber Security Operations Consultant | 11 April 2024

Monitoring identity providers for suspicious sign-in activity is the bread and butter of security operations in the cloud. With more than 77% of attacks seeing compromised credentials as an initial access method (Sophos, 2024), it is incredibly important that cloud IdPs such as Microsoft Entra ID are monitored closely for signs of account compromise, and that a range of responses is available once compromise is identified. Despite this imperative, security operations analysts can struggle to reach high-confidence determinations on whether a suspicious sign-in warrants action. Often a compromised account only becomes apparent through later-stage threat activity, in part due to an unfortunately necessary reliance on anomaly-based detections for monitoring sign-in activity. These anomaly-based alerts give an analyst little context for determining with confidence whether an account has been compromised, and they can be exceptionally noisy depending on how “mobile” the organisation is (cough cough BYOD, overseas or remote workers, and 3rd party contractors). The result is wishy-washy alert triage that runs the risk of an over- or under-reaction.

By far the most efficient strategy for limiting uncertainty when investigating anomalous sign-ins is to relate indicators in the activity period to a known threat or scenario, based either on external cyber threat intelligence or on incidents faced internally. This gives an analyst something concrete to work with, rather than just “weird” or anomalous events.

The general process for performing this is to:

This process is similar to an analysis of competing hypotheses (ACH), which is a fantastic generalist methodology for dealing with uncertainty in a slew of cybersecurity applications (more on this from the CIA). An example matrix of threats that we routinely look out for at Fortian whilst investigating sign-in alerts is given below. It isn't complete (there are too many to go through here) but provides a good starting point as an illustration.

Figure 2: An example suspicious sign-in triage matrix for mapping anomalies to known threats

The “threats and scenarios” detailed in the matrix don't necessarily need to be malicious events. In fact, it can be beneficial to detail a wide range of hypotheses so that an analyst can reach an equally high-confidence determination of benign activity as of malicious activity. For example, an organisation with a high prevalence of consumer VPN usage may define a scenario called “legitimate VPN usage” and map out the indicators that distinguish this activity from malicious scenarios.
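Keeping a triage matrix as data makes it easy to apply the same way on every alert. The sketch below is a minimal, hypothetical illustration of ACH-style scoring: each hypothesis marks indicators as consistent (+1) or inconsistent (-1), and, as ACH recommends, hypotheses are ranked by how little evidence contradicts them rather than by how much supports them. The hypothesis names, indicator names and mappings are assumptions for illustration, not Fortian's actual matrix; note that a benign scenario sits alongside the malicious ones.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical triage matrix: +1 = indicator consistent with the hypothesis,
# -1 = inconsistent. Indicators that are not listed are treated as neutral.
MATRIX: Dict[str, Dict[str, int]] = {
    "device code phishing": {
        "device_code_protocol": +1,
        "consumer_vpn_ip": +1,
        "known_device": -1,
    },
    "PRT phishing": {
        "auth_broker_app": +1,
        "consumer_vpn_ip": +1,
        "known_device": -1,
    },
    "legitimate VPN usage": {
        "consumer_vpn_ip": +1,
        "known_device": +1,
    },
}


def rank_hypotheses(observed: Set[str]) -> List[Tuple[str, int, int]]:
    """Return (hypothesis, inconsistent, consistent) tuples, most plausible first."""
    ranked = []
    for hypothesis, indicators in MATRIX.items():
        inconsistent = sum(1 for i in observed if indicators.get(i) == -1)
        consistent = sum(1 for i in observed if indicators.get(i) == +1)
        ranked.append((hypothesis, inconsistent, consistent))
    # ACH favours the hypothesis with the least evidence against it;
    # ties are broken by the amount of supporting evidence.
    return sorted(ranked, key=lambda r: (r[1], -r[2]))


print(rank_hypotheses({"consumer_vpn_ip", "known_device"})[0][0])  # → legitimate VPN usage
```

Ranking on inconsistencies first means a single strongly contradicting indicator (here, a sign-in from the user's known device) counts against a phishing hypothesis even when other indicators fit it.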

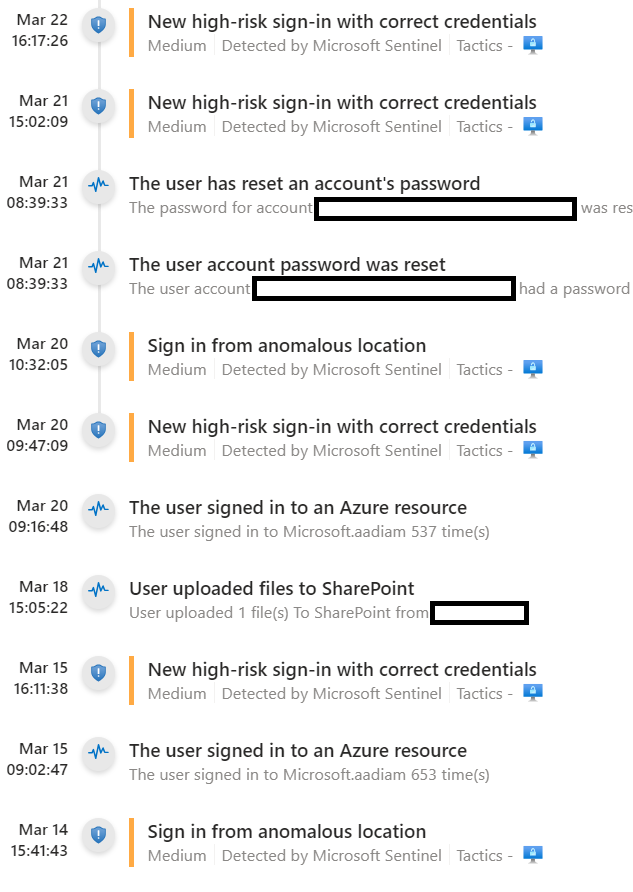

There can be instances where the above analysis falls flat, namely when the collected evidence doesn't convincingly point toward any single known threat, or toward any known threat at all. In many instances this is compounded by gaps in telemetry. In this case, all we have to work with is the anomaly itself. The question then turns from “what type of threat does this represent?” to “how likely is this to be some unknown threat?”. We can answer this by benchmarking several key properties of the suspicious sign-in against past organisation and user activity, then using this information to reason about an overall anomaly score, enriched with enough context to pull apart “normal weird” from “yikes weird”.
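A minimal sketch of the benchmarking step, assuming past sign-ins have already been exported as simple records. The `SignIn` fields and the `benchmark` helper are hypothetical simplifications, not the Entra ID log schema. Each property of the suspicious sign-in is checked against both the user's own history and the wider organisation's history, which is what separates "new for this user but common across the organisation" from "never seen anywhere".

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class SignIn:
    user: str
    country: str
    device_id: str
    asn: int  # autonomous system number of the source IP


PROPERTIES = ("country", "device_id", "asn")


def benchmark(suspicious: SignIn, history: Iterable[SignIn]) -> Dict[str, Dict[str, bool]]:
    """For each property, report whether its value was seen before for the user and in the org.

    `history` should exclude the sign-in under review.
    """
    history = list(history)
    result = {}
    for prop in PROPERTIES:
        value = getattr(suspicious, prop)
        # All past sign-ins (any user) that share this property value.
        matching = [s for s in history if getattr(s, prop) == value]
        result[prop] = {
            "seen_for_user": any(s.user == suspicious.user for s in matching),
            "seen_in_org": bool(matching),
        }
    return result
```

For example, if `alice` signs in from a country and network that only `bob` has used before, both properties come back as new for the user but seen in the organisation, which is a weaker signal than a value never seen anywhere.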

Fortian runs several analytics when investigating sign-in anomalies to determine how anomalous they actually are. For simplicity's sake, these are typically modelled as true or false statements. Once these are defined and run consistently during triage, an analyst has an easy point of comparison with past anomalies or incidents. From this, a natural baseline forms that can be used to pull the trigger on a remediation action with confidence.

We found the following analytics work well in general cases of identity compromise in Entra ID:

Highly anomalous

Moderately anomalous

Common anomalies
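Modelling each analytic as a true/false predicate makes the set easy to run in a fixed order and count per tier. Below is a minimal sketch, assuming the facts about a sign-in (for instance, the output of a benchmarking step) are collected in a flat dictionary; the analytic names, fact keys and tier assignments are hypothetical examples, not the list Fortian uses.

```python
from typing import Callable, Dict, List, Tuple

Facts = Dict[str, object]
Analytic = Tuple[str, str, Callable[[Facts], bool]]  # (name, tier, predicate)

# Hypothetical analytics; the tiers mirror the high / moderate / common split.
ANALYTICS: List[Analytic] = [
    ("device never seen for user", "high", lambda f: not f["device_seen_for_user"]),
    ("country never seen in organisation", "high", lambda f: not f["country_seen_in_org"]),
    ("country never seen for user", "moderate", lambda f: not f["country_seen_for_user"]),
    ("sign-in outside user's usual hours", "moderate", lambda f: not f["within_usual_hours"]),
    ("consumer VPN or hosting IP", "common", lambda f: bool(f["vpn_or_hosting_ip"])),
]


def run_analytics(facts: Facts) -> Dict[str, List[str]]:
    """Return the names of the analytics that evaluated to True, grouped by tier."""
    hits: Dict[str, List[str]] = {"high": [], "moderate": [], "common": []}
    for name, tier, predicate in ANALYTICS:
        if predicate(facts):
            hits[tier].append(name)
    return hits
```

Because every analytic is evaluated on every alert, two triages can be compared simply by their per-tier counts.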

As an example, an analyst is triaging a suspicious sign-in and has noted that the user usually signs in from their onboarded work laptop from an office IP address in Melbourne. However, the user has now signed in from an offboarded device using a VPN during European work hours. From this, the analyst records the following anomalous properties:

Anomaly score: 1 high, 3 moderate, 2 common

The analyst then compares the computed anomaly score against past incidents and investigations (either from records or from experience) and notes that the expected profile is a larger volume of common anomalies and no high anomalies. Because this sign-in departs from that profile, the analyst determines that the user's account is likely to be compromised.
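The comparison in this example can be made explicit by keeping the tallies of past investigations that were confirmed benign and asking how often a benign case looked at least as bad. The sketch below assumes such a record exists; the baseline scores are made-up values for illustration, and what counts as "at least as bad" (here, at least as many high and at least as many moderate anomalies) is a design choice to adjust per organisation.

```python
from typing import List, NamedTuple


class Score(NamedTuple):
    high: int
    moderate: int
    common: int


def benign_precedent_rate(current: Score, past_benign: List[Score]) -> float:
    """Share of past benign-confirmed cases with at least as many high and moderate anomalies."""
    if not past_benign:
        raise ValueError("no benign baseline to compare against")
    matches = sum(
        1 for past in past_benign
        if past.high >= current.high and past.moderate >= current.moderate
    )
    return matches / len(past_benign)


# Made-up baseline of past sign-in investigations that were confirmed benign.
baseline = [Score(0, 1, 4), Score(0, 0, 5), Score(0, 2, 3), Score(1, 1, 2)]
print(benign_precedent_rate(Score(high=1, moderate=3, common=2), baseline))  # → 0.0
```

A rate of 0.0 only says that no past benign case looked this anomalous; it is a prompt to escalate, not a probability of compromise.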

How anomalous each of these features is will naturally vary from organisation to organisation, which is more than fine! Adjusting the scoring based on business context, or adding new properties, is good practice so that we don't repeat the problem we are trying to solve in the first place (e.g. if we consistently get five high-scoring anomalies for every suspicious sign-in, and these are confirmed as benign, then we are right back where we started).
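One way to act on this feedback loop is to demote analytics that keep firing on sign-ins later confirmed as benign. The sketch below is a hypothetical illustration: the analytic names are placeholders, and the 0.5 threshold and one-tier demotion rule are arbitrary assumptions rather than a recommended policy.

```python
from typing import Dict, List, Set

TIER_ORDER = ["high", "moderate", "common"]


def recalibrate(tiers: Dict[str, str], benign_hits: List[Set[str]],
                threshold: float = 0.5) -> Dict[str, str]:
    """Demote an analytic one tier if it fired in more than `threshold` of benign-confirmed cases.

    `benign_hits` holds, for each benign-confirmed investigation, the set of analytics that fired.
    """
    updated = dict(tiers)
    if not benign_hits:
        return updated  # nothing to learn from yet
    for name, tier in tiers.items():
        rate = sum(name in hits for hits in benign_hits) / len(benign_hits)
        if rate > threshold and tier != TIER_ORDER[-1]:
            updated[name] = TIER_ORDER[TIER_ORDER.index(tier) + 1]
    return updated
```

Recalibrating from recorded outcomes, rather than by feel, keeps the tiers tied to what has actually been confirmed in the environment.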

The main issue with this strategy is that it is a bit tedious, requiring a lot of analysis on alerts that often occur frequently. Thankfully, the brunt of the effort of running the necessary queries can easily be automated in a SIEM/SOAR platform of choice. We find that defining common investigation patterns, such as those above, as KQL functions works exceptionally well to speed things up and ensure consistency.
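In practice each analytic would live as a saved KQL function. To keep the examples in one language, the Python sketch below assembles one such "seen before" check as a KQL query string. The helper names are hypothetical, and the `SigninLogs` column names used (`TimeGenerated`, `UserPrincipalName`, `Location`) should be checked against your own workspace's schema.

```python
from datetime import datetime, timezone


def kql_string(value: str) -> str:
    """Quote a value as a single-quoted KQL string literal, escaping backslashes and quotes."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def prior_signins_from_location(upn: str, location: str, before: datetime,
                                lookback_days: int = 30) -> str:
    """Hypothetical helper: KQL counting a user's sign-ins from one location before a cut-off."""
    if before.tzinfo is None:
        raise ValueError("'before' must be timezone-aware")
    cutoff = before.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return "\n".join([
        "SigninLogs",
        f"| where TimeGenerated > ago({int(lookback_days)}d) and TimeGenerated < datetime({cutoff})",
        f"| where UserPrincipalName =~ {kql_string(upn)}",
        f"| where Location == {kql_string(location)}",
        "| count",
    ])


print(prior_signins_from_location("alice@contoso.com", "GB",
                                  datetime(2024, 4, 10, 9, 0, tzinfo=timezone.utc)))
```

The resulting string could then be submitted from a SOAR playbook, for example through `LogsQueryClient.query_workspace` in the `azure-monitor-query` Python package; a count of zero corresponds to a "never seen for this user" result.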

So far we've gone through two strategies that aim to reduce the uncertainty of determining whether an account has been compromised given some suspicious sign-in activity. We're still left with situations where uncertainty simply can't be reduced to a reasonable degree given the data on hand. This is particularly evident in environments where baselining anomalous activity is exceptionally difficult, or where there are gaps in monitoring or log data. For example, we may lack context about working hours, whether consumer VPNs are expected, where users are physically located, and so on. In some instances this can be inferred from telemetry, but often it can't. Instead, we can balance the probability that the account is compromised against the potential business impact of responding to the (uncertain) threat.

There are several options available to security operations analysts for dealing with threats against Microsoft Entra ID identities that don't involve going scorched-earth by locking the account, removing MFA methods, nuking tokens, and resetting the password twice. Simple, low-impact actions can be performed without needing the full context of what the threat is, just the likelihood that it exists.
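Balancing probability against business impact can be made explicit with a small expected-cost comparison. The sketch below is a hypothetical illustration: the action names are placeholders, and the disruption costs, containment probabilities and breach impact are made-up numbers that an organisation would need to estimate for itself. Disruption is paid whether or not the account is compromised, while the impact of a compromise is only paid if the account is compromised and the chosen action fails to contain it.

```python
from typing import Dict, Tuple

# Hypothetical response options: (disruption cost, probability the action
# contains a real compromise). All numbers are illustrative assumptions.
ACTIONS: Dict[str, Tuple[float, float]] = {
    "no action": (0.0, 0.0),
    "low-impact action": (1.0, 0.6),
    "full remediation": (20.0, 0.99),
}


def expected_cost(p_compromise: float, disruption: float,
                  containment: float, impact: float) -> float:
    """Disruption is always paid; impact is paid only if compromised and not contained."""
    return disruption + p_compromise * (1.0 - containment) * impact


def choose_action(p_compromise: float, impact: float) -> str:
    """Pick the action with the lowest expected cost for the given probability."""
    return min(ACTIONS, key=lambda a: expected_cost(p_compromise, *ACTIONS[a], impact))


for p in (0.01, 0.3, 0.9):
    print(p, choose_action(p, impact=100.0))
# prints: 0.01 no action / 0.3 low-impact action / 0.9 full remediation
```

With these numbers the low-impact action wins across a broad middle band of probabilities, which is exactly the region where analysis has not been able to settle the question either way.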

The above uncertain response actions are not guaranteed to remediate the threat, since its true nature remains unknown to the analyst. Consequently, it is critical that, when performed, they are recorded and made visible to future investigations. Future anomalous sign-in activity against the account could be due to a new threat, or to an old uncertain threat that was not fully remediated (again, we're playing on probability at this point). This also means that analysts should absolutely not rely on uncertain actions as a crutch; they should only be used once it becomes apparent that uncertainty can't be adequately reduced through analysis.
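Recording uncertain actions so that they surface in later triage can be as simple as a per-user log that is queried at the start of every investigation. The sketch below is a minimal, hypothetical in-memory version; the field names and the 90-day look-back window are assumptions, and in practice this would more likely live in the case management or SIEM platform.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Dict, List


@dataclass
class UncertainAction:
    user: str
    action: str
    taken_at: datetime  # timezone-aware
    rationale: str


@dataclass
class ActionLog:
    entries: Dict[str, List[UncertainAction]] = field(default_factory=dict)

    def record(self, entry: UncertainAction) -> None:
        """Append an uncertain action to the user's history."""
        self.entries.setdefault(entry.user, []).append(entry)

    def prior_actions(self, user: str, now: datetime,
                      window: timedelta = timedelta(days=90)) -> List[UncertainAction]:
        """Uncertain actions taken against `user` within `window` before `now`, oldest first."""
        return sorted(
            (e for e in self.entries.get(user, []) if now - window <= e.taken_at <= now),
            key=lambda e: e.taken_at,
        )


log = ActionLog()
log.record(UncertainAction("alice", "low-impact action",
                           datetime(2024, 4, 1, tzinfo=timezone.utc), "unresolved VPN sign-in"))
```

A non-empty result from `prior_actions` tells the next analyst that a new anomaly may be the same, not fully remediated, threat.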

Despite its critical importance, investigating suspicious sign-in activity can be challenging for security operations analysts because of the complex task of reducing uncertainty. There are, however, several simple analytical strategies that can be prepared ahead of time to help analysts reach high-confidence conclusions about the nature of threats against identity:

In the next part of the series, “Uncertain Threats Part 2”, we will demonstrate how Bayesian Belief Networks (BBNs) can be used to model uncertainty in security operations investigations.

Stay tuned!
